10 Controlled Access

Now that we understand a little more about the general challenges associated with state, we’re ready to look at the problem of controlled access to shared state. We mentioned in chapter 8 that there are risks if we have uncontrolled access to shared resources. One kind of risk was that we might somehow corrupt the data, and another kind of risk was that we might get “stuck” in some kind of deadlock or livelock. If we want to have well-behaved access to shared resources, how would we go about doing that? To explore this topic, we don’t want to start with thinking about human readers and books, because humans are very sophisticated. Instead we want to think about really simple mechanisms. The simpler we can make the mechanisms, the more readily we can use them.

So instead of trying to share a book, we’ll look in more detail at sharing a cell. We first encountered cells on the infinite tape of a Turing machine (chapter 3), and then we used them again as small pieces of mutable state that could have more than one name (chapter 9). A cell is just a slot where we can put a single small item of information—a single character like “w” or a single number like 1257.

We’re going to be a little vague about exactly how big a cell is, because it partly depends on how much ingenuity we use in implementing it. But by the scale of things that matter for everyday life, the contents of a cell are always pretty small: not really huge numbers, no more than a single character of text.

Lost Update

We’ll consider two very simple processes sharing a particular single cell. We’ll use the cell to hold a number, and each of the processes will update the number in that cell to be the value one larger than it was previously. That is, each process will take the following steps in sequence:

- 1. Read the value in the cell.

- 2. Figure out the new value by adding 1 to that existing value.

- 3. Write the new value into the cell.

So if we start with the value 0 in the cell, and we have two processes that each increment that value by 1, we’d expect to wind up with the value 0 + 1 + 1 = 2 in the cell. When people build and run little systems like this, it’s disturbingly easy to end with the value 1 in the cell, as though one of the processes had completely failed to do its job. This is a lost update problem, and it’s worth looking at the situation in a little more detail to understand how it can happen.

First, though, let’s consider why solving this problem is important. If we are only talking about these numbers unconnected to any particular meaning, we might not much care whether the answer is 1 or 2. But now let’s assume that the cell represents a bank balance, and that the discrepancy corresponds to a missing dollar that belongs to us. Now it starts to seem like more of an issue. Or if we assume that the number represents a geographic coordinate on a GPS system issuing us driving directions, or is the target of some oil-drilling crew, again the single-digit error starts to seem important in terms of causing large problems—taking a wrong road, drilling in the wrong field, all kinds of ugly situations we’d prefer to avoid. And in all of these cases it’s not likely to be much consolation if someone tries to reassure us that these problems don’t happen very often—indeed, it’s actually more of a problem that these lost updates happen unpredictably.

After all, if a failure occurs consistently, we can adapt to it. If we always get 1 instead of 2, we can just add one to the result consistently. Or if we always get 1 on Tuesdays, or when the moon is full, we can just add one to the result on those occasions. But if the failure occurs only occasionally, inconsistently, and unpredictably, then we don’t have any way to fix it. So instead we need to prevent it. To prevent it, we need to understand how it arises.

Two Processes, in Detail

Let’s think in terms of Alice and Bob acting out the steps of the two processes sharing the single cell. Although the two processes have the exact same steps, it’s helpful to be able to name every step of each process separately. So we’ll write A1 to mean “step 1 of Alice’s process.” We’ll also write “A1 → A2” (and say that as “A1 before A2”) to mean that step A1 always happens before A2.

Alice’s process can be summarized as

A1 → A2 → A3

which just means that A1 always happens before A2, and A2 in turn always happens before A3 … which is what we implicitly specified with the numbered list of steps that we used before. If we want to be clearer about what’s actually happening, we can replace these names like A1 with the action that’s happening:

Read cell to get x → compute x + 1 → write x + 1 into cell

Or we could summarize it like this:

Read → Compute → Write

Those are all alternative summaries of the single process that Alice is executing.

Here’s a summary of Bob’s process:

B1 → B2 → B3

So now we have Alice’s process and Bob’s process, three steps each, that we can write alongside each other:

A1 → A2 → A3

B1 → B2 → B3

Now we have all of the machinery in place, and we have a lost-update problem to solve.

Interleaving

To understand why lost updates can occur, we have to understand the interleaving of steps of the two processes: that is, what orderings are possible for the steps. This discussion of interleaving may be easier to follow if you actually make up a set of six index cards, one for each of the three steps in each of the two processes. Then you’ll be in a better position to arrange and rearrange them to see what’s possible and what’s not possible.

Before we go further, let’s ask a question in terms of these names: what is the always-happens-before relationship between A1 and B2?

It seems pretty easy to say that one possible relationship, B2 → A1 is not true. That is, we don’t have any trouble seeing that A1 can happen before B2. After all, Alice’s process is listed first on the page; step A1 is further to the left; step A1 is the first step of Alice’s process, while B2 is after the first step of Bob’s process. So there are lots of cues in this simple example suggesting that A1 can happen before B2, which in turn must mean that B2 always happening before A1 is not correct.

The trickier part is thinking through whether A1 → B2 is true. (Spoiler alert! It’s not.) In this case, all those same cues from the text are suggesting that of course A1 happens before B2. But we need to be careful to understand what it means to “take a step” in this kind of process.

How does step-taking really work? If we think of Alice and Bob as independent step-takers, then each of them simply takes steps at their own speed. They don’t need to be “fair” or “consistent” or “sensible” or anything like that. So we have to be careful not to assume that they have those kinds of constraints. Alice might take steps very quickly while Bob takes them very slowly, or vice versa, or they might go at roughly the same speed. When we consider the range of speeds each might have—instead of assuming that they are roughly the same—it becomes clearer that we can’t guarantee that A1 happens before B2.

Multiprocessing and Multiprogramming

When Alice and Bob each have the ability to take steps that are completely independent, computer scientists would call it multiprocessing. A different situation that’s also important to consider is where Alice and Bob are actually sharing a single underlying step-taking machine. A given step may be Alice’s, or it may be Bob’s, but each single step only advances one of them. If Alice is taking a step, Bob is not (and vice versa). We encountered this approach previously in chapter 8, where we called it timesharing or multitasking. Sometimes computer scientists use the term multiprogramming when comparing this approach to multiprocessing.

In the multiprogramming example, the actual “step-taker” is a third person, Chuck. We’ll modify the Alice-and-Bob example a little to make it clear how they can share a single step-taking machine. Chuck doesn’t do anything very interesting himself; he just picks who gets to take a step next. When Alice or Bob is ready to take a step, they raise their hand; Chuck picks the next one to take a step from among whoever happens to have a hand up. When the chosen one finishes their single step, Chuck picks again. Chuck never picks someone who doesn’t have his or her hand raised, but otherwise his choices are not predictable. Again, he doesn’t have to be “fair” or “consistent” or “sensible.” He won’t pick a process that’s not ready, but otherwise he can be as fair or unfair as he likes.

Since we can’t predict Chuck’s actions in general, we have to consider the possibility that he’ll choose Bob twice before he chooses Alice. If that happens, then B2 happens before A1, which in turn means that A1 → B2 isn’t true.

Some Example Interleavings

To summarize, we can say that there are some “inside-the-process” combinations that are always-happens-before relationships, but so far we haven’t found any such relationships across different processes. In general, the steps of the two processes may be interleaved in any way that preserves their inside-the-process ordering constraints. It’s a little like shuffling together two ordered decks of cards. A “good” shuffle is fair and the cards from the two sides alternate; but even if the shuffle is pretty bad and the interleaving isn’t very even, it’s still a shuffle and we still use the result. The combined deck will have unpredictable sequences of left-hand cards vs. right-hand cards—but the riffling together doesn’t change the order of any of the cards within the left-hand deck, or the order of the cards within the right-hand deck.

Returning to our simple example, there are several equally possible orderings, like “all Alice first” or “all Bob first” or “alternating steps” (and several others as well). Here’s what some of those orderings look like:

• All Alice first: A1, A2, A3, B1, B2, B3

• All Bob first: B1, B2, B3, A1, A2, A3

• Alternating: A1, B1, A2, B2, A3, B3

Now recall that we started down this path of looking at interleavings so that we could understand the lost-update problem. To get there, let’s focus on contrasting two of these, the “all Alice first” interleaving and the “alternating” interleaving.

To do that comparison, we’re going to switch around the way we write out the process steps. The processes will still be the same, but different notation helps make different aspects clear—just like 0.25 and 1/4 are different ways of writing the same fraction, and we could use whichever one makes a problem easier to solve. With our previous “A1, B2” notation we’ve been emphasizing that there are two processes A and B while being a little fuzzy about what is happening in each of those different steps. To be clear about the cause of the lost write, we effectively have to turn our notation inside out. Instead of emphasizing the processes and downplaying the specifics of each step, we’ll now emphasize each step’s meaning, with just enough information about each process so we can tell them apart. So now instead of calling a particular step “A1” we’ll call that exact same step “ReadA.”

Where does that notation come from? We summarized these processes previously as the three steps Read, Compute, Write. Recall that Read reads the value from cell x, Compute figures out a value for x + 1, and Write writes that value back into the cell x. Both processes A and B have identical steps, so we use a subscript to keep track of which process is doing each step: “ReadA” is Alice’s process doing a Read, while “ReadB” is the comparable Read step … but in Bob’s process.

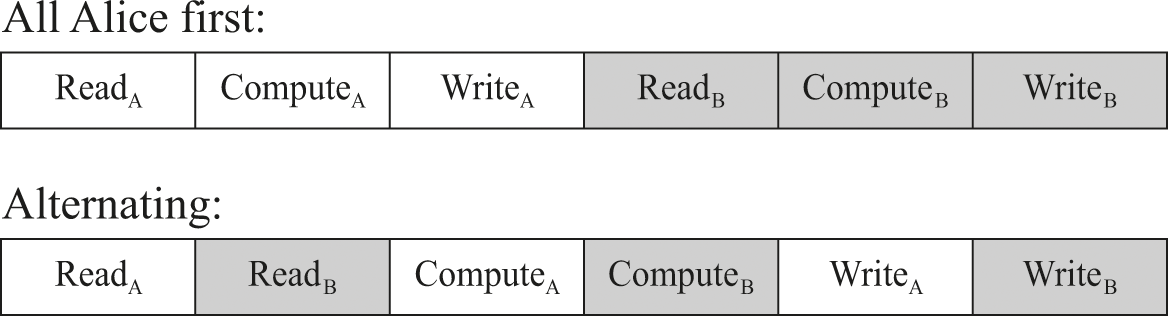

Using this new notation, figure 10.1 shows what’s happening in those two different interleavings.

Two different interleavings of the same steps.

Let’s focus on the two Read steps, ReadA and ReadB. In the upper (Alice-first) interleaving, ReadA and ReadB read different values for x. ReadB reads x after it has been updated with a new value (increased by one) that was computed by Alice’s process. That arrangement gives the correct final result, in which x has been incremented by 2.

But in the lower (alternating) interleaving, ReadA and ReadB both read the exact same value for x. ReadB reads x the very next step after ReadA has read x—so Alice’s process hasn’t yet had a chance to update x. That arrangement causes a lost update, in which x only gets incremented by 1.

Each process did all the right steps so that each one would increment the value. But because of the way those steps were interleaved, we’ve unfortunately lost the effect of one of the incrementings.

Is This a Real Problem?

If we think about Alice and Bob as people carrying this out with pencil and paper, this lost-update problem seems pretty unlikely. Alice and Bob would be aware of each other’s presence, and the piece of paper itself would serve as a kind of coordinating mechanism. They’re not really going to blindly compute the same value and then have the second person’s result overwrite the first person’s result. So this all may look a little contrived.

It may help to think about an “idiot” version in which neither person has an ounce of common sense and is just exactly following a list of instructions, without any higher-level thinking about what’s to be accomplished. It’s easy to think that this is such an easily avoided problem, so how can it be a real concern? But our computers are indeed just dogged step-takers, performing their given instructions without any common sense or higher-level reasoning. So we have to be concerned about how to give them the exact right instructions to avoid this sort of error.

Is it possible to prevent the problem of lost updates? As we’ve described the arrangement so far, any interleaving can happen. Instead, we’d like to arrange the system so that only certain interleavings can happen—specifically, only those that give the correct answer.

In our small example, we know we could get the correct answer by arranging to only run one process at a time—and indeed, that’s always a worst-case solution that is available to us. In principle, we can always avoid the problems that can arise from multiple processes with shared data. One possibility is that we simply execute only one process, to completion, at a time. Another possibility is that we eliminate sharing by giving each process its own copy of all data. But those solutions seem kind of drastic, and probably not workable in general. For example, if Google search were a service that could only perform one search at a time for one user at a time, it’s hard to see how it could have become a valuable resource for millions of users on the web. Eliminating multiprocess behavior or eliminating sharing would be running away from the lost-update problem, not solving it.

Mutual Exclusion

Our problem here is concurrent access—access by multiple executing processes. We need to build something to briefly limit concurrent access to the cell x, long enough to stay out of trouble. And more broadly, we need to have such a mechanism not only for cell x but also for shared state in general. This specific problem is what computer scientists call mutual exclusion: “exclusion” meaning that a process can somehow keep other processes away from shared data, and “mutual” in that any single process can use similar mechanisms to exclude other processes.

Let’s imagine a simple mutual exclusion mechanism that would eliminate our lost-update problem. We’ll call the mechanism a lock. Somehow—we won’t worry about the details—the mechanism ensures that only one process at a time is holding the lock. There are two things a process can do with a lock: “Acquire” and “Release.” After a successful Acquire operation, the executing process is holding the lock. After a successful Release operation, the executing process is no longer holding the lock.

Despite the name, this lock is not very much like a household lock on a front door or a gym locker. It’s not really trying to keep out burglars and thieves, nor does it have a special key or combination that lets only certain people open it. Later in the book we’ll see some computational mechanisms that do have keys and are about enforcing security of various kinds, but that’s not what these locks accomplish. These locks are just about limiting the ways that concurrent processes share state.

Fans of espionage may be happier with an analogy to a “dead drop.” That’s a way for a spy to pass information to the spy’s handler (or vice versa) while never having the two parties in the same place at the same time. The sending party leaves the information at a designated place and the receiving party picks up the information at that designated place, with some signaling or timing constraint to ensure they are not both there at the same time. Either the spy has possession of the information, or the handler has possession of the information, but the spy never hands the information directly to the handler or vice versa. With the dead drop, spy and handler have effectively implemented a form of mutual exclusion on the shared data.

Using a Lock

We can tweak the Alice and Bob processes to use Acquire and Release. The only changes we’ll make are to introduce locks that work as described and to use those locks to avoid lost updates. Our goal is to ensure that every possible interleaving of their steps will end with x incremented by 2 (which is the correct result). Just as the previous version using uncontrolled processes, both the Alice and Bob processes will contain identical steps—but now those identical steps include a couple of operations on locks:

- 1. Acquire Lockx

- 2. Read x

- 3. Compute x + 1

- 4. Write x

- 5. Release Lockx

The heart of the process is still the exact same Read-Compute-Write steps we had before. But we have effectively wrapped those steps inside an Acquire-Release pair. Acquire and Release are a little like left and right parentheses: they have to match, or else there’s some kind of a problem.

What progress have we actually made? The crucial new capability here is that we can have confidence in the result when we allow the two processes to run concurrently. The lock lets concurrency be dynamically restricted, only in the places where it matters. We didn’t have to pick in advance whether process A or B would run on its own, nor did we have to decide in advance which one would run ahead of the other. If the processes didn’t have any shared resources, they can run to completion in parallel with no sequencing at all between them. Locks are a simple mechanism to control concurrent access dynamically. Once we have this simple mutual exclusion problem under control, it becomes possible to build much larger, more complex systems with concurrency and shared state.